Dev Containers – our embedded workflow!

Intro to the problem

Developers at GoodByte often work on multiple embedded projects simultaneously or in quick succession: some projects last only a month, others two or three years. As a result, a developer might switch between two projects in the same week or handle three projects in a quarter. Each project requires its own SDKs, toolchains, and specific configurations: ESP-IDF, Nordic’s nRF Connect SDK, TI’s Code Composer Studio, STM32Cube, and so on. Every time someone switches projects, they need to set up a new environment: download SDKs, configure environment variables, install compilers, and resolve version conflicts. It becomes a cycle of “Why does this not compile? Oh, wrong compiler version. Why does this flashing tool not see my device?”

This is not only frustrating, it also reduces productivity and increases cognitive load. Our goal was to let developers concentrate on coding in C/C++ (and sometimes Python scripts) rather than dealing with environment setup. In this article, we share our experience moving from local setups to server tools, then to Docker images, and finally to Dev Containers with GoodByte’s custom “features” and templates.

What we’ve tried

We experimented with three main setups before settling on Dev Containers:

- Local Setups

  - What: Each developer installs vendor SDKs (ESP-IDF, nRF Connect, STM32Cube, etc.) on their machine and configures environment variables manually.

  - Pitfalls: Versions diverged (“Oops, I still have GCC 9 installed”), leftover variables from previous projects broke builds, and SDK updates happened inconsistently, leading to “works on my machine” issues.

- Server-Hosted Tools

  - What: A central server holds approved toolchains in versioned folders. Developers copy the server contents locally to get faster builds.

  - Pitfalls: Only a few people could push updates, so urgent fixes were often delayed. CI also struggled to stay in sync with the server: keeping a clean CI build environment at the start of each job meant either downloading the whole server or relying on a cached copy, and neither option was efficient or safe.

- Project-Specific Docker Images

  - What: Each project uses a Dockerfile bundling the exact GCC toolchain, SDK at a fixed version, Python venv, and build tools. Developers run the container with -v $PWD:/workspace to work inside it.

  - Pitfalls: Dockerfile contents could not be reused across projects without copy-pasting and manual edits, so every project required its own near-duplicate Dockerfile.


Looking at these limitations (version drift, slow-to-update shared toolchains that were hard to use in CI, and duplicated Dockerfiles), we recognized that Dev Containers address most of the pain points with a reproducible, IDE-integrated environment.

Dev Containers – what’s that?

Dev Containers combine Docker’s reproducible environment with the convenience of a full-featured IDE. Instead of manually running docker build and docker run each time (or fighting with mounts and USB passthrough), Dev Containers let you define a small configuration that tells your IDE:

- How to build (or pull) a Docker image using a Dockerfile or a Docker Compose file.

- Which extensions to install inside that container, so IntelliSense, code completion, and debugging work correctly.

- How to attach the editor, terminal, and debugger directly into the running container, making the container feel like your local environment.

Anatomy of a Dev Container

Typically, your project will have a .devcontainer/ folder containing two key files:

- Dockerfile (or docker-compose.yml): instructions for building the image, i.e. which base OS, toolchains, Python packages, etc.

- devcontainer.json: a small JSON file that specifies which Dockerfile or Compose service to use, which folder becomes the workspace, which extensions to install, and so on.
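To make the second file concrete, here is a minimal sketch in Python that produces a Dockerfile-based devcontainer.json. The keys used (name, build.dockerfile, workspaceMount, workspaceFolder, customizations.vscode.extensions) are standard devcontainer.json properties; the helper name, the project name, and the extension list are hypothetical placeholders, not taken from our templates.

```python
import json


def minimal_devcontainer(name: str, dockerfile: str, workspace: str,
                         extensions: list[str]) -> dict:
    """Return a minimal Dockerfile-based devcontainer.json as a dict."""
    return {
        "name": name,
        # Dockerfile path, resolved relative to devcontainer.json itself.
        "build": {"dockerfile": dockerfile},
        # Bind-mount the local project into the container; when
        # workspaceFolder is overridden, workspaceMount should match it.
        # The doubled braces yield a literal ${localWorkspaceFolder}.
        "workspaceMount": f"source=${{localWorkspaceFolder}},target={workspace},type=bind",
        # Folder inside the container that the IDE opens as the workspace.
        "workspaceFolder": workspace,
        # VS Code extensions installed inside the container, not on the host.
        "customizations": {"vscode": {"extensions": extensions}},
    }


if __name__ == "__main__":
    cfg = minimal_devcontainer(
        name="my-firmware",  # hypothetical project name
        dockerfile="Dockerfile",
        workspace="/workspace",
        extensions=["llvm-vs-code-extensions.vscode-clangd"],
    )
    # Redirect this output into .devcontainer/devcontainer.json.
    print(json.dumps(cfg, indent=4))
```

Generating the file is only for illustration; in practice the JSON is written once by hand (or by a template) and committed next to the Dockerfile.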

Moreover, Dev Containers let you reuse self-contained units of configuration created by others, such as a toolchain or a debugger package installed into the image. These are called Dev Container Features. A whole Dev Container configuration can also be packaged and applied as-is to your workspace; this mechanism is called Dev Container Templates. We’ll show in a moment what they bring to the table.
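Features and templates are referenced by OCI-style identifiers such as ghcr.io/devcontainers/features/python:1, which you will see in the configurations in this article. As a rough illustration of how such a reference breaks down into registry, namespace, feature id, and version tag, here is a small Python parser. It is our own sketch, not part of any Dev Containers tooling; treating a missing tag as latest is an assumption based on the usual OCI convention, and digest references are not handled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeatureRef:
    registry: str    # e.g. "ghcr.io"
    namespace: str   # e.g. "goodbyte-software/goodbyte-features"
    feature_id: str  # e.g. "jlink"
    tag: str         # e.g. "latest", "1"


def parse_feature_ref(ref: str) -> FeatureRef:
    """Split '<registry>/<namespace>/<id>[:<tag>]' into its parts.

    Sketch only: digest references (name@sha256:...) and local
    "./path" features are not handled.
    """
    # Only a colon after the last "/" separates the tag; an earlier colon
    # would belong to a registry port such as "localhost:5000".
    last_slash = ref.rfind("/")
    colon = ref.rfind(":")
    if colon > last_slash:
        path, tag = ref[:colon], ref[colon + 1:]
    else:
        path, tag = ref, "latest"  # assumed default when no tag is given
    registry, _, rest = path.partition("/")
    namespace, _, feature_id = rest.rpartition("/")
    if not registry or not namespace or not feature_id:
        raise ValueError(f"not a <registry>/<namespace>/<id>[:tag] reference: {ref!r}")
    return FeatureRef(registry, namespace, feature_id, tag)
```

For example, parse_feature_ref("ghcr.io/goodbyte-software/goodbyte-features/jlink:latest") yields registry ghcr.io, namespace goodbyte-software/goodbyte-features, id jlink, and tag latest.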

Why Dev Containers?

But first, let’s take a look at some advantages we can notice while using this approach:

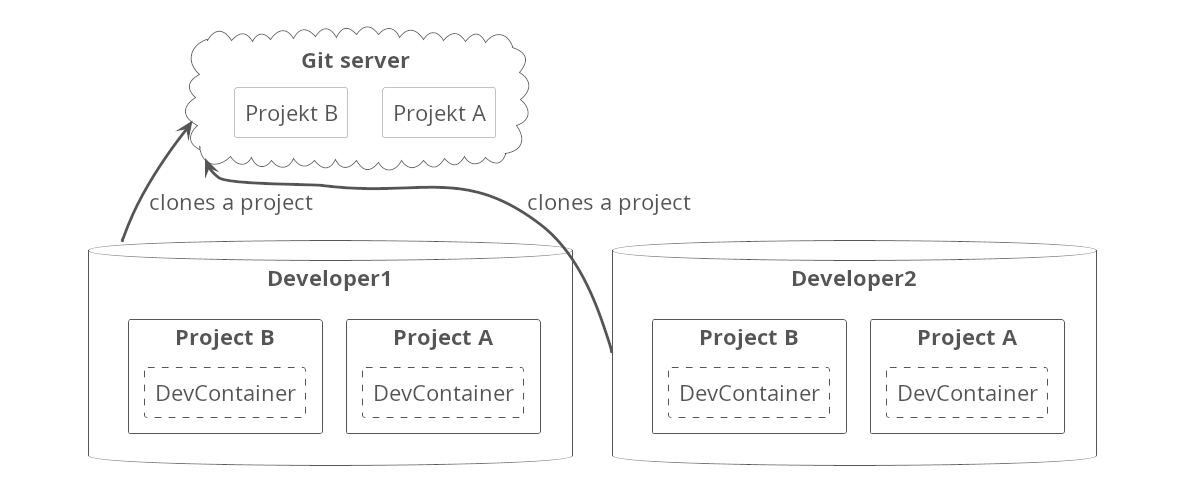

- Minimal Onboarding Time – new developers clone the repo, open it in their IDE, and the container builds itself. No need to manually install SDKs, set up Python venvs, or configure environment variables.

- Consistent Environment – Everyone uses the exact same OS version, toolchain, and Python packages. No more “works on my laptop but not on yours.”

- CI/CD Alignment – you can use the same container image in your CI pipeline (GitHub Actions, GitLab CI, Jenkins). That means “it works locally” truly means “it works in CI” as well.
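The same configuration can also be driven outside the IDE. As a minimal sketch, assuming the Dev Containers CLI (the @devcontainers/cli npm package) is on the PATH, this Python wrapper starts the project’s container and runs a build command inside it, the way a CI job would. The wrapper and its function names are hypothetical; only the devcontainer up and devcontainer exec subcommands with --workspace-folder come from the CLI.

```python
import shutil
import subprocess
import sys


def up_cmd(workspace: str) -> list[str]:
    """Build (if needed) and start the container defined in .devcontainer/."""
    return ["devcontainer", "up", "--workspace-folder", workspace]


def exec_cmd(workspace: str, command: list[str]) -> list[str]:
    """Run `command` inside the already started dev container."""
    return ["devcontainer", "exec", "--workspace-folder", workspace, *command]


def run_in_devcontainer(workspace: str, command: list[str]) -> int:
    """Start the dev container for `workspace`, then run `command` in it.

    Returns the exit code of `devcontainer up` if it fails, otherwise the
    exit code of the command itself.
    """
    if shutil.which("devcontainer") is None:
        raise RuntimeError("devcontainer CLI not found; install @devcontainers/cli")
    up = subprocess.run(up_cmd(workspace))
    if up.returncode != 0:
        return up.returncode
    return subprocess.run(exec_cmd(workspace, command)).returncode


if __name__ == "__main__" and len(sys.argv) > 1:
    # e.g. python3 dc_run.py bash -c "cmake -B build -S . && cmake --build build"
    sys.exit(run_in_devcontainer(".", sys.argv[1:]))
```

Because both the local run and the CI run start from the same devcontainer.json, a failure in one should be reproducible in the other.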

In short, Dev Containers solve the biggest pain points of local or server-based setups by providing a reproducible, IDE-integrated, and cross-platform way to work. Next, we’ll see how to apply Dev Containers to Nordic + Zephyr projects.

Dev Containers with Nordic + Zephyr

Zephyr-based projects (common when using Nordic’s nRF Connect SDK) involve multi-repo tooling (west), overlay workspaces, and complex device trees. Below is our recommended way to configure them.

Let’s assume you have a very simple Zephyr project that only blinks an LED: a single main.cpp file in app/src and a CMakeLists.txt one level up, in app. How can you quickly set it up, e.g. in VS Code?

- Press Ctrl + Shift + P.

- Select Dev Containers: Reopen in Container, then choose Add configuration to workspace.

- Paste the following link and press Enter: ghcr.io/goodbyte-software/template-example/nordic:0.3

- Do not choose any additional features and press OK.

What’s happening now? We’ve just used a Dev Container template. You can spot a .devcontainer directory in your workspace and a west.yml file in the app directory. You can now build the application with west build --board nrf52dk/nrf52832 app and, if you have the board connected, flash it with west flash.
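If you want the same two steps available as one command (for instance from a terminal inside the container or from CI), a thin wrapper is enough. This is a hypothetical Python sketch, not part of the template: the board and app directory come from the example above, while the build directory name and the script itself are our assumptions. It only uses the west build --board/--build-dir and west flash --build-dir options.

```python
import subprocess
import sys

# Board and app directory from the example above; BUILD_DIR is our own
# choice (west also defaults to ./build).
BOARD = "nrf52dk/nrf52832"
APP_DIR = "app"
BUILD_DIR = "build"


def west_build_cmd(board: str, app_dir: str, build_dir: str) -> list[str]:
    """Command line for building app_dir for the given board."""
    return ["west", "build", "--board", board, "--build-dir", build_dir, app_dir]


def west_flash_cmd(build_dir: str) -> list[str]:
    """Command line for flashing the image produced in build_dir."""
    return ["west", "flash", "--build-dir", build_dir]


def main(steps: list[str]) -> int:
    """Run the requested steps ("build", "flash") in order; stop on failure."""
    for step in steps:
        if step == "build":
            cmd = west_build_cmd(BOARD, APP_DIR, BUILD_DIR)
        elif step == "flash":
            cmd = west_flash_cmd(BUILD_DIR)  # requires a connected board
        else:
            print(f"unknown step: {step!r}", file=sys.stderr)
            return 2
        returncode = subprocess.run(cmd).returncode
        if returncode != 0:
            return returncode
    return 0


if __name__ == "__main__" and len(sys.argv) > 1:
    # e.g. python3 fw.py build flash
    sys.exit(main(sys.argv[1:]))
```

Passing the same --build-dir to both commands keeps flash pointed at the image that build just produced.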

How did it happen? When the template is applied, the following .devcontainer/devcontainer.json file is downloaded and used as the recipe for the development container:

```jsonc
{
    "image": "ubuntu:22.04",
    "name": "zephyr",
    "remoteUser": "dev",
    "forwardPorts": [
        8001
    ],
    // Reusable Dev Container Features: nRF Connect SDK toolchain, CodeChecker, J-Link
    "features": {
        "ghcr.io/goodbyte-software/goodbyte-features/ncs-toolchain:latest": {
            "version": "0.17.4",
            "architecture": "arm"
        },
        "ghcr.io/goodbyte-software/goodbyte-features/codechecker:latest": {},
        "ghcr.io/goodbyte-software/goodbyte-features/jlink:latest": {
            "version": "8.60"
        }
    },
    // Bind the project to /repo and expose the host's /dev (USB debug probes)
    "mounts": [
        "source=.,target=/repo,type=bind",
        "source=/dev,target=/dev,type=bind"
    ],
    "onCreateCommand": "bash -i /repo/.devcontainer/onCreateCommand.sh",
    "workspaceFolder": "/repo",
    "remoteEnv": {
        "LC_ALL": "C"
    },
    "shutdownAction": "stopContainer",
    // Privileged mode lets flashing and debugging tools access USB devices
    "runArgs": [
        "--privileged"
    ],
    "customizations": {
        "vscode": {
            "extensions": [
                "nordic-semiconductor.nrf-connect-extension-pack",
                "llvm-vs-code-extensions.vscode-clangd"
            ]
        }
    }
}
```

The features section lists all the dependencies needed to build the project and flash the board. For a new project, you apply the template only once, at the start, and then keep the generated files in your version control system. Thanks to that, the development environment works out of the box for newcomers.

Everything described above is presented in the video guide below.
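One detail worth noting in the configuration above: the tool versions are pinned through feature options ("version": "0.17.4", "8.60"), but the feature references themselves use the :latest tag, so a feature’s install script can change between container rebuilds. If full reproducibility matters, a check like the following hypothetical Python script (our own sketch, not part of the Dev Containers tooling) can list floating references. It assumes the file contains at most full-line // comments.

```python
import json
import sys
from pathlib import Path


def load_devcontainer(path: Path) -> dict:
    """Load devcontainer.json, skipping full-line // comments.

    Sketch only: block comments, comments after a value on the same line
    and trailing commas (all allowed in JSON with Comments) are not handled.
    """
    lines = [
        line for line in path.read_text().splitlines()
        if not line.lstrip().startswith("//")
    ]
    return json.loads("\n".join(lines))


def unpinned_features(config: dict) -> list[str]:
    """Return feature references with no tag or with the ':latest' tag."""
    floating = []
    for ref in config.get("features", {}):
        if ref.startswith("."):
            continue  # local features ("./my-feature") are not tagged
        last_segment = ref.rsplit("/", 1)[-1]
        tag = last_segment.split(":", 1)[1] if ":" in last_segment else "latest"
        if tag == "latest":
            floating.append(ref)
    return floating


if __name__ == "__main__" and len(sys.argv) > 1:
    # e.g. python3 check_features.py .devcontainer/devcontainer.json
    for ref in unpinned_features(load_devcontainer(Path(sys.argv[1]))):
        print(f"unpinned feature: {ref}")
```

Whether to pin feature tags is a trade-off: :latest picks up fixes automatically, while an explicit tag guarantees that every rebuild runs the same install scripts.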

Dev Containers with STM32

Okay… but what if we want to work on a project based on a chip from STMicroelectronics, or basically any non-Zephyr project with an ARM-based chip on the board? In the previous example, you may have noticed that a .devcontainer/devcontainer.json file was downloaded. For this section, let’s write our own.

Below is a file adjusted for such projects. What really differs here is the set of features. Previously we used the goodbyte-features/ncs-toolchain feature, which contains the nRF Connect SDK together with the required toolchain. We no longer need it, but we still need something to compile and debug our project with. Luckily, we prepared a feature that contains the gcc-arm-none-eabi package. The final file looks like this:

```jsonc
{
    "image": "ubuntu:22.04",
    "name": "arm",
    "remoteUser": "dev",
    "features": {
        // arm-none-eabi GCC toolchain replaces the ncs-toolchain feature
        "ghcr.io/goodbyte-software/goodbyte-features/arm-none-eabi-gcc:latest": {},
        "ghcr.io/goodbyte-software/goodbyte-features/dev-dependencies:latest": {},
        "ghcr.io/goodbyte-software/goodbyte-features/jlink:latest": {
            "version": "7.94e"
        },
        "ghcr.io/devcontainers/features/python:1": {
            "version": "3.10"
        }
    },
    "mounts": [
        "source=.,target=/repo,type=bind",
        "source=/dev,target=/dev,type=bind"
    ],
    "workspaceFolder": "/repo",
    "remoteEnv": {
        "LC_ALL": "C"
    },
    "shutdownAction": "stopContainer",
    "runArgs": [
        "--privileged"
    ]
}
```

Of course, there may be no ready-made feature for what your project needs, unlike the features used here. In that case, you can plug in any Dockerfile that suits you, for example through a Docker Compose file like this:

```jsonc
{
    "dockerComposeFile": ["./docker-compose.yml"],
    // Service from docker-compose.yml that the IDE attaches to
    "service": "dev_container",
    "workspaceFolder": "/repos/${localWorkspaceFolderBasename}",
    "shutdownAction": "stopCompose"
}
```

Why do that?

Besides giving the whole team a consistent development environment, this brings one very useful benefit to the development process: once you have a configuration that any developer can use from the IDE or the CLI, the CI worker becomes just another user that builds your project in the very same container as you. Here is an example GitHub Actions configuration:

```yaml
name: 'build'

on:
  push:
    branches: [main]
  pull_request:
    branches: [main]

jobs:
  build:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout
        uses: actions/checkout@v4
        with:
          submodules: 'recursive'

      - name: Build and run dev container commands
        uses: devcontainers/ci@v0.3
        with:
          runCmd: cmake -B build -S . && cmake --build build
```

Summary

Developers at GoodByte faced environmental volatility as they switched between projects with different SDKs and toolchains. Local setups led to version drift and “works on my machine” problems; server-hosted tools introduced network bottlenecks and update delays; standalone Docker images meant copy-pasted Dockerfiles and long rebuilds. Dev Containers combine Docker’s reproducibility with the convenience of a full-featured IDE: a single, version-controlled JSON configuration builds (or pulls) a Docker image, installs the right VS Code extensions, and attaches the editor and debugger directly to the container. The result is minimal onboarding, a reproducible environment across all platforms, and seamless CI/CD integration, so teams can focus on code, not setup.